A guide for thoughtful doctors who want smarter tools—without giving up their clinical instincts.

(This is part 2 of a short series that began last week with “AI in Medicine.”)

Table of Contents

- How to Use AI in Medicine Without Losing Your Clinical Soul

- The Temptation and the Risk

- Rule #1: Think with AI, Not through It

- Rule #2: Ask Better Questions, Not Just Faster Ones

- Rule #3: Translate AI Back to Human

- Rule #4: Don’t Let the Machine Flatten the Case

- Rule #5: Use AI as a Roundtable When You’re Alone

- Where the Real Work Still Belongs to You

- 🧪 The Quick-Check Guide: Which AI Tools to Trust?

- Looking Ahead: Smarter, Still Human

- Q1: How should doctors start using AI in medicine?

- Q2: What are the biggest mistakes doctors make when using AI?

- Q3: Can AI help doctors at home with tough cases?

- Q4: How does AI improve patient communication?

- Q5: Does using AI mean doctors will lose their clinical edge?

- Q6: Are there risks to using AI tools in medicine?

- Q7: Which AI tools are most helpful for doctors today?

- Q8: How can clinicians evaluate whether an AI tool is trustworthy?

- Q9: Will AI replace doctors in the future?

- Q10: What’s the biggest benefit of using AI in medicine?

What You’ll Learn in This Post:

1️⃣ How to use AI in medicine without surrendering your clinical instincts

2️⃣ Five core principles that preserve human judgment in an AI-supported system

3️⃣ How to make AI a partner—not a dictator—in clinical care

4️⃣ Tools doctors can use from home to study, reflect, and review cases

5️⃣ How to decide when to trust, question, or override the algorithm

How to Use AI in Medicine Without Losing Your Clinical Soul

The Temptation and the Risk

There’s something seductive about a machine that seems to know.

AI doesn’t get tired. It doesn’t forget the name of that rare syndrome. It doesn’t misplace the lab values or second-guess its memory.

For the overburdened clinician, this can feel like salvation. But also—like surrender.

So here’s the question this post will ask, and try to answer:

How do we use AI in medicine without becoming its apprentice?

How do we embrace this new thinking partner while keeping our judgment intact?

We do it by choosing our relationship with AI carefully.

Not as a replacement. Not as a threat. But as an assistant—smart, tireless, and often helpful—who works for us, not instead of us.

Rule #1: Think with AI, Not through It

One of the most dangerous habits that can emerge in this new AI era is automation bias—the assumption that if the system suggested it, it must be right.

But AI doesn’t see the patient in front of you. It doesn’t hear their hesitation, note their cultural context, or sense the contradiction between what’s on paper and what’s in the room. It sees patterns—not people.

That’s why your job is not to accept what AI offers. It’s to interrogate it.

Ask:

- Does this match what I’m observing?

- Is there a context the algorithm might be missing?

- What question is this answer assuming I asked?

When AI surfaces an idea you hadn’t considered, that’s a win. But when it confirms what you already suspected? Be careful—it might just be agreeing with your bias.

You are still the one doing medicine. The machine just helps you see your blind spots.

Rule #2: Ask Better Questions, Not Just Faster Ones

AI can generate differentials, suggest labs, and surface literature faster than you can blink. But the quality of what you get depends entirely on what you ask.

Garbage in, garbage out has never been more literal.

If you give it a lazy prompt—“Headache in 40yo?”—you’ll get a generic list.

But if you feed it the story—“Intermittent right-sided headache, pulsatile, worse during menstruation, with photophobia and neck stiffness, no prior history, two recent ER visits without CT”—then you get value.

This applies even more in research.

Ask it to summarize a new trial? It’ll oblige.

Ask it to analyze the statistical assumptions or cross-reference with prior studies? It’ll stretch you—and itself.

Think of AI not as a vending machine for answers, but a Socratic partner: the more thoughtful your input, the more revealing the output.
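To make the contrast between the lazy prompt and the full story concrete, here is a minimal Python sketch. The `CasePrompt` class, its field names, and the closing question are all hypothetical, invented for illustration; they are not part of any AI tool's interface. The idea is simply that listing what you have not yet told the model is a quick check on prompt quality.

```python
from dataclasses import dataclass, field


@dataclass
class CasePrompt:
    """Hypothetical container for the details a useful clinical prompt carries."""
    age: int
    complaint: str
    character: str = ""
    timing: str = ""
    associated: list[str] = field(default_factory=list)
    history: str = ""
    workup: str = ""

    def missing(self) -> list[str]:
        """Names of the descriptive fields that are still empty."""
        optional = ["character", "timing", "associated", "history", "workup"]
        return [name for name in optional if not getattr(self, name)]

    def render(self) -> str:
        """Join the filled-in fields into a single prompt string."""
        parts = [f"{self.age}-year-old with {self.complaint}"]
        parts += [p for p in (self.character, self.timing) if p]
        if self.associated:
            parts.append("with " + " and ".join(self.associated))
        parts += [p for p in (self.history, self.workup) if p]
        return ", ".join(parts) + ". What should be on the differential, and why?"


# The lazy prompt: age and complaint only.
lazy = CasePrompt(age=40, complaint="headache")

# The full story, using the details from the example above.
full = CasePrompt(
    age=40,
    complaint="intermittent right-sided headache",
    character="pulsatile",
    timing="worse during menstruation",
    associated=["photophobia", "neck stiffness"],
    history="no prior history",
    workup="two recent ER visits without CT",
)

print(lazy.missing())   # every descriptive field is still empty
print(full.render())    # the full story as one prompt
```

The code itself matters less than the habit it encodes: before you hit enter, ask what the model would need to know that you have not yet said.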

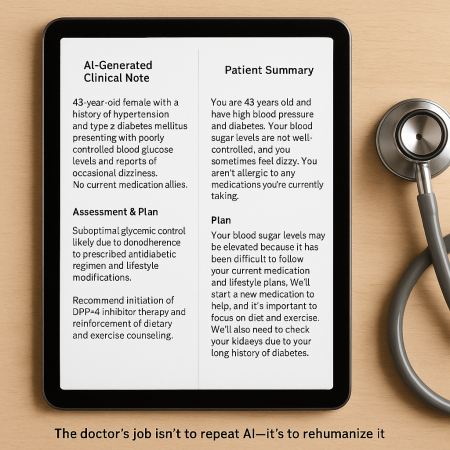

Rule #3: Translate AI Back to Human

Let’s be honest: most AI-generated outputs are… efficient, but not exactly poetic.

You’ve probably seen the summaries: “The patient has a 7-day history of upper respiratory symptoms. Recommend symptomatic care and follow-up.” True. But also devoid of human tone, reassurance, or nuance.

This is where you come in.

AI can generate notes, letters, or patient summaries—but only you can rewrite them in a way that feels relational rather than transactional.

That means:

- Adjusting tone for a worried patient.

- Clarifying complexity for a non-clinician.

- Adding warmth where the machine is sterile.

AI is a translator of logic. But you are the translator of meaning.

When you take a machine-generated summary and reshape it into something a real human can read, remember, and trust—you’re not losing your role. You’re elevating it.

Rule #4: Don’t Let the Machine Flatten the Case

Here’s the real danger with pattern-based tools: they often reward what’s typical. But in medicine, the most important cases are the ones that aren’t.

AI works best with averages. But your job is to find the outlier.

So when the system says: “This looks like a mild viral syndrome,” you ask:

“Okay… but what if it’s not?”

When the algorithm ranks pneumonia as more likely than pulmonary embolism, you ask:

“What about this case makes that assumption shaky?”

If we let the machine flatten everything into curves and confidence intervals, we risk losing the art of medicine—the part that’s attentive to the exception, not the rule.

AI isn’t a prophet. It’s a pattern-spotter. And the best clinicians know when to go off-pattern.

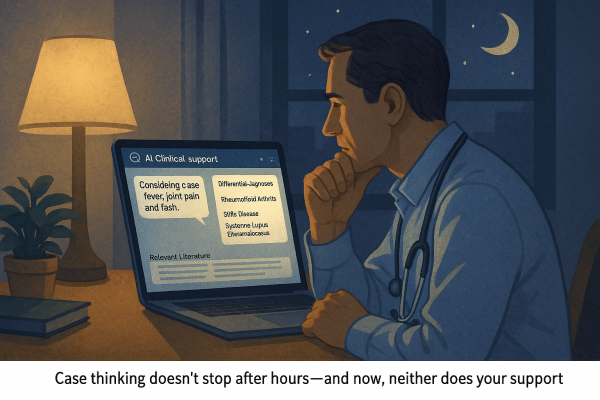

Rule #5: Use AI as a Roundtable When You’re Alone

Some of your best clinical thinking happens after hours—when you’re off the floor, away from the chart, and mentally reworking a case that didn’t sit right.

In the past, this meant Googling, flipping through UpToDate, or texting a colleague.

Today? You have access to independent AI tools that work like a 24/7 peer consult room.

Let’s say you’re turning over a case involving overlapping autoimmune symptoms. You can use:

- Language models (like GPT-4) to generate possible differentials, suggest labs to re-check, or propose related rare diagnoses to consider.

- AI-based literature tools like Consensus, ResearchRabbit, or Semantic Scholar to surface relevant studies, not just by keyword but by meaning and context.

- Tools like Scite.ai to pull not just articles but how those articles are cited (supportively or critically), helping you identify consensus vs. controversy.

- Plain-English interfaces to PubMed, powered by natural language AI, so you can type: “What are the latest 2024 trials comparing leflunomide and methotrexate in seronegative RA?” and get something useful, fast.
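For the curious, here is a rough picture of what a plain-English PubMed question might become once it is turned into a structured search. This is only a sketch under stated assumptions: the concepts are picked out by hand, the `build_pubmed_search` helper is hypothetical, and how any particular product actually does this is not something I can vouch for. The endpoint is NCBI's public E-utilities search service, and `[tiab]`, `[pt]`, and `[dp]` are standard PubMed field tags for title/abstract, publication type, and publication date.

```python
from urllib.parse import urlencode

# Public NCBI E-utilities search endpoint.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_pubmed_search(concepts: list[str], year: int, max_results: int = 20) -> str:
    """Return an ESearch URL that ANDs the concepts and limits to RCTs from one year.

    Hypothetical helper: the concept list is chosen by hand here, which is the
    step a natural-language interface would automate.
    """
    clauses = [f'"{c}"[tiab]' for c in concepts]          # title/abstract terms
    clauses.append("randomized controlled trial[pt]")     # publication type filter
    clauses.append(f"{year}[dp]")                         # publication date filter
    params = {
        "db": "pubmed",
        "term": " AND ".join(clauses),
        "retmax": max_results,
        "retmode": "json",
    }
    return ESEARCH_URL + "?" + urlencode(params)


url = build_pubmed_search(
    ["leflunomide", "methotrexate", "seronegative rheumatoid arthritis"], 2024
)
print(url)
```

Seeing the structured version is a useful reminder that the tool is making choices on your behalf: which words count as concepts, which study types to include, which years to search. Those choices are worth auditing when the results look thin.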

Even more interesting: some tools now digest entire clinical trials, pulling out outcomes, N numbers, limitations, and conflicts of interest—saving you the headache of parsing a 9-page PDF at 11 p.m.

These aren’t gimmicks. They’re scaffolds for your thinking—especially when you don’t have a mentor on speed dial or a hallway consult to lean on.

In that way, AI becomes something rare:

A thinking partner that doesn’t interrupt, doesn’t rush, and doesn’t forget the paper you read two years ago that might now be relevant.

Where the Real Work Still Belongs to You

Here’s the truth most AI evangelists forget to say out loud: AI won’t save you from doing the hard parts.

It can’t sense hesitation behind a patient’s smile.

It doesn’t feel the tension in a room when a patient says they’re “fine” but clearly aren’t.

It doesn’t recognize the gap between what’s medically reasonable and what’s personally possible.

That’s your job. And it always will be.

But what AI can do is this:

It can hold some of the mental weight.

It can challenge your assumptions.

It can point out something you didn’t know you forgot.

And if you use it well—deliberately, skeptically, humanely—it won’t deskill you. It will deepen you.

🧪 The Quick-Check Guide: Which AI Tools to Trust?

Here’s a simple test for any AI tool you’re considering adding to your workflow:

1. Does it help me think better—or just faster?

If it only accelerates a rushed system, skip it. If it helps you see more clearly, it’s worth a look.

2. Can I audit what it’s doing?

Opaque tools that give black-box recommendations without transparency? Approach with caution.

3. Does it reduce my cognitive burden—or add a layer of complexity?

The best AI feels like delegation, not micromanagement.

4. Is it grounded in evidence—or marketing gloss?

Ask for published validation, real-world pilots, or access to peer-reviewed evaluations. Tools built for medicine should withstand a scientific lens.

5. Does it keep the patient at the center?

If it nudges you to treat metrics instead of people, it’s time to pause and recalibrate.

You don’t need to adopt every AI tool that crosses your path. You just need to choose the ones that help you do what you already do well—only more sustainably.
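If you like to keep notes on the tools you trial, the five questions translate naturally into a small checklist. The sketch below is illustrative only: the short keys, the `open_concerns` helper, and the example review are all made up, and there is deliberately no score or pass mark, since the guide above does not define one.

```python
# The five quick-check questions, keyed by short hypothetical labels.
QUICK_CHECK = {
    "thinks_better": "Does it help me think better, not just faster?",
    "auditable": "Can I audit what it's doing?",
    "reduces_burden": "Does it reduce my cognitive burden?",
    "evidence_based": "Is it grounded in evidence rather than marketing gloss?",
    "patient_centered": "Does it keep the patient at the center?",
}


def open_concerns(answers: dict[str, bool]) -> list[str]:
    """Return the questions a tool has not passed; unanswered counts as not passed."""
    return [q for key, q in QUICK_CHECK.items() if not answers.get(key, False)]


# Example review of an imaginary ambient scribe (evidence question left unanswered).
review = {
    "thinks_better": True,
    "auditable": False,
    "reduces_burden": True,
    "patient_centered": True,
}

for question in open_concerns(review):
    print("Still open:", question)
```

Treating an unanswered question as an open concern is a design choice: it keeps a tool from passing by default just because nobody asked.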

Looking Ahead: Smarter, Still Human

As AI continues to reshape medicine, clinicians are faced with a choice:

Withdraw into skepticism and hold the line… or step forward with clear eyes and firm principles.

This post has tried to map out that second path: a clinician’s framework for thoughtful adoption.

In my next post, I’ll look at how AI is already influencing clinical education, research interpretation, and patient self-care behavior, whether or not we’re paying attention.

For now: stay curious, stay grounded, and stay human!

Your judgment still matters.

But you don’t have to carry all of it alone.

FAQs about AI in Medicine

Q1: How should doctors start using AI in medicine?

Start with low-risk, high-utility tools—like documentation assistants or literature summarizers. Use them to reduce workload and increase perspective, not to shortcut judgment. AI is most helpful when treated like a thinking partner, not a decision-maker. Begin in areas where you feel overloaded, not uncertain.

Q2: What are the biggest mistakes doctors make when using AI?

Overtrusting AI outputs (automation bias), skipping verification, and assuming AI is objective. Many tools are trained on biased or incomplete datasets. Use AI to widen your thinking—but always interrogate the output. If it seems too confident or simplistic, that’s a red flag.

Q3: Can AI help doctors at home with tough cases?

Yes. Many AI tools function like virtual colleagues, helping with differentials, summarizing research, and highlighting uncommon diagnoses. These are especially useful for reflection, second-guessing, or double-checking assumptions. It’s like having a digital brain trust that doesn’t get tired.

Q4: How does AI improve patient communication?

AI can help translate complex notes into plain language, generate discharge instructions in multiple languages, and summarize next steps for better recall. But the final delivery still matters—it’s up to the clinician to ensure the message lands clearly and compassionately.

Q5: Does using AI mean doctors will lose their clinical edge?

Only if they stop thinking. Used deliberately, AI can make doctors sharper by prompting them to double-check assumptions, explore more hypotheses, and back up decisions. It’s not dumbing down care; it’s sharpening it.

Q6: Are there risks to using AI tools in medicine?

Yes—bias, hallucination, over-reliance, and opaque logic are all concerns. That’s why transparency, auditability, and human oversight must always remain central. AI should reduce risk, not replace reasoning.

Q7: Which AI tools are most helpful for doctors today?

Tools that assist with documentation (ambient scribing), literature distillation, case analysis, and multilingual summaries are among the most valuable. Start with ones that free up time without flattening nuance.

Q8: How can clinicians evaluate whether an AI tool is trustworthy?

Look for: peer-reviewed validation, clear sourcing, adjustable parameters, and clinician oversight. Avoid tools that can’t explain their own reasoning or don’t show data sources. The best tools feel like collaborators—not mystery boxes.

Q9: Will AI replace doctors in the future?

Unlikely. AI can enhance pattern recognition and data analysis, but it lacks context, emotional intelligence, and moral judgment. The most valuable clinicians will be the ones who know how to work with AI—not ignore it or fear it.

Q10: What’s the biggest benefit of using AI in medicine?

Relief from cognitive overload. AI doesn’t just help you think faster—it helps you think wider. And that space is where better care begins.