From ink-stained charts to intelligent algorithms, the tools of medicine have changed—and ignoring the biggest one yet might be malpractice.

Table of Contents

- What You’ll Learn in This Post:

- 7 Reasons AI in Medicine Is No Longer Optional

- From Ink to Intelligence: How We Got Here

- When AI Sees What We Don’t: A True-ish Case

- Where AI in Medicine Belongs: Before, During, and After the Visit

- What Happens Without AI in Medicine? The Quiet Cost of Cognitive Isolation

- The Real Risk: Not Using AI Is No Longer Neutral

- Not Every Gap Is About Ignorance—But Some Are About Ego

- AI Skepticism in Medicine: A Thoughtful FAQ for Thoughtful Clinicians

- The Moral Case for AI in Medicine

- A Future Worth Practicing In

- FAQ – AI in Medicine: Good, Great, Bad, Catastrophic?

- Q1: Isn’t AI just another tech trend that’ll fizzle out like Google Glass?

- Q2: How do we know AI isn’t making things worse, just faster?

- Q3: Isn’t it dangerous to rely on AI for diagnosis?

- Q4: Doesn’t AI kill the art of medicine?

- Q5: Can’t doctors just think for themselves? Isn’t that what they’re trained for?

- Q6: What if AI disagrees with me? Should I be worried or offended?

- Q7: Will AI deskill the next generation of doctors?

- Q8: Aren’t you just hyping this because it sounds futuristic and fancy?

- Q9: What’s the risk of not using AI in medicine?

- Q10: Be honest—does using AI make me a better doctor?

What You’ll Learn in This Post:

❇️ How the history of medical tools paved the way for today’s AI breakthroughs

❇️ Why AI in medicine isn’t science fiction—it’s clinical reality, and must NOT be brushed aside

❇️ A real story where AI caught what even smart doctors missed

❇️ What doctors who don’t use AI might be missing—and why it matters

→ A preview of next steps: advice for clinicians on working with AI without losing their edge (my guide for clinicians publishes in a few days!)

7 Reasons AI in Medicine Is No Longer Optional

From Ink to Intelligence: How We Got Here

Medicine has always been a tool-using profession. The stethoscope. The ophthalmoscope. The humble pen. Each new tool helped extend our reach, sometimes literally, sometimes metaphorically. But none of them pushed against the limits of human cognition the way AI in medicine is doing today.

To understand how big this shift is, it helps to take a quick look back.

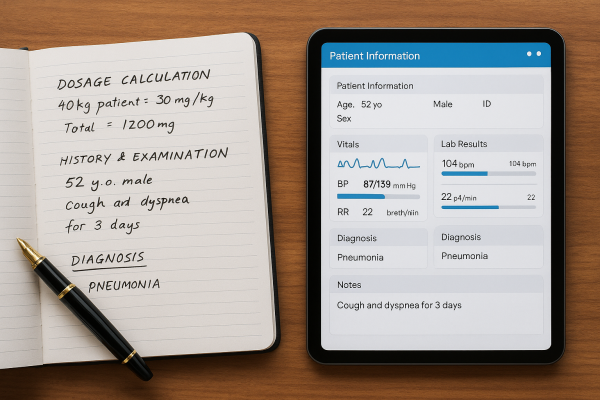

Not so long ago, prescribing medication meant scribbling dosages in longhand and doing rough math in your head—or on a napkin if you were honest. Hospital dosing errors weren’t rare; they were baked into the system. Then came calculators. Then standardized protocols. Then dosing software.

Each advance felt awkward or intrusive at first, and some even felt like a challenge (or a subtle threat) to hard-won clinical expertise. And yet, with each step, error rates dropped, lives were saved, and doctors like me found time to think bigger.

The same thing happened with records. For decades, physicians documented their thoughts in handwriting so inscrutable it became its own cultural trope. I'm old enough to have handwritten notes in paper charts, to have asked for help deciphering older doctors' handwriting, and to have squinted at a chart long enough to just shrug and move on to the next patient. If you wanted to know what another specialist thought, good luck. EMRs were messy at first (and they remain nearly insurmountable for some docs of a certain age), but they brought order, collaboration, and the first glimpses of medicine as a connected, team-based endeavor.

AI is the next evolution of that same pattern. But the leap is so much greater.

This isn’t just about faster math or legible records. It’s about thinking—not replacing it, but scaffolding it in ways the human brain alone never could.

Let me show you what I mean.

When AI Sees What We Don’t: A True-ish Case

A few months ago, a 44-year-old woman—let’s call her Mara—came in with an odd cluster of symptoms: low-grade fevers, intermittent rash, joint pain, and a vague sense of “just not feeling right.” She’d seen three doctors, had normal basic labs, and was labeled, kindly, as having a “stress reaction.”

But Mara’s case had been preloaded into a diagnostic support system that I built, which ran large-scale pattern matching across her history, symptoms, and obscure lab trends—even those not flagged by the EMR.

The AI flagged a possibility that hadn’t occurred to anyone: Adult-onset Still’s disease. Rare. Systemic. Easily mistaken for a mood disorder, a viral syndrome, or vague autoimmune fatigue.

A targeted ferritin test strongly supported the diagnosis. She was started on the right treatment the same week.

Now, no machine deserves a thank-you card. It didn't comfort Mara or convince her that her symptoms were real. But it did widen the lens. It suggested a path none of us were trained to look for, and one that none of her previous providers had caught.

That’s what AI in medicine does best. It sees patterns at scale. Not perfectly. Not always reliably. But often enough—and early enough—that ignoring it becomes harder to defend.

Where AI in Medicine Belongs: Before, During, and After the Visit

So what exactly does AI do in the real world of clinical care?

Let’s set aside the robots and retinal scans for a moment. Most of the meaningful work AI is doing today doesn’t feel futuristic. It feels like subtle, behind-the-scenes competence—the kind that quietly strengthens every step of a medical interaction without drawing too much attention to itself.

It starts before the visit even begins.

Before the Visit: Smart Intake and Contextual Intelligence

The usual routine? A patient checks in, fills out a form, and the doctor skims it while opening the exam room door.

The AI-enhanced version? The system already knows the patient has a family history of early cardiac disease, a recent spike in blood pressure logged through their smartwatch, and three prior urgent care visits for chest “discomfort.” It synthesizes that info and suggests additional questions or labs to consider. Not as commands—just as reminders. Smart nudges.

It's not replacing the physician's insight. It's adding dimensionality, plus a second set of "eyes" to make sure subtle details aren't overlooked.

Now imagine this scaled across a population. AI tools can flag patients overdue for screening, predict who’s likely to miss appointments, and even draft personalized outreach messages—all before the patient shows up. That’s not cold automation. That’s preemptive compassion.

During the Visit: Smarter Questions, Safer Medicine

In some clinics, doctors get as little as seven minutes per patient. In that time, we're supposed to review the chart, ask detailed questions, perform an exam, document the encounter, place orders, handle billing codes, and, if there's time, make eye contact.

AI doesn’t fix the broken system. But it does lighten the overload.

It can propose differential diagnoses based on the patient's complaints and history, drawing on thousands of similar cases and current literature. It doesn't hand over "the answer." But it suggests what's worth considering. And to save time and energy, these suggestions can even be ranked by statistical likelihood.

It can flag potential drug interactions on the fly—not just the textbook ones, but the odd, niche combos that might never cross a clinician’s radar.

And perhaps most importantly, it can help ask better questions, especially in areas where human bias or fatigue might otherwise narrow the frame.

Example? When a patient with abdominal pain and weight loss presents to a busy urgent care, an AI system might quietly prompt: “Family history of colon cancer? Recent change in bowel habits? Anemia?”—nudging the doctor toward the right line of thinking, even if only as a safety net.

After the Visit: From Chaos to Clarity

This is where AI’s value really starts to sing—though not in a flashy way.

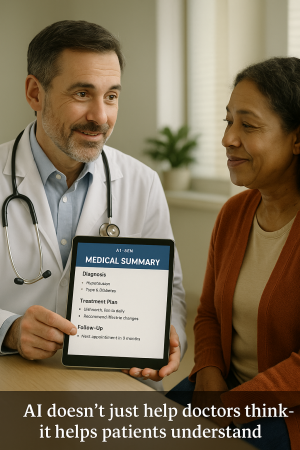

It helps with documentation: drafting notes, letters, and summaries in seconds rather than minutes. But better than speed, it translates.

It can take a clinical note and repackage it into patient-friendly language: “Here’s what we discussed. Here’s what to do next. Here’s what to watch for.” Written in plain English, or even another language, if needed.

It can also help coordinate care: flagging abnormal labs that need follow-up, reminding clinicians about delayed imaging, or syncing communication across specialists who might otherwise never have spoken.

In short, AI doesn’t just tidy up the leftovers after a visit. It helps make sure the visit doesn’t end in ambiguity, lost tasks, or vague instructions.

What Happens Without AI in Medicine? The Quiet Cost of Cognitive Isolation

Let’s be honest: no doctor wants to be told they’re missing something.

We train for years to sharpen our thinking, trust our instincts, and refine our clinical judgment. But even the sharpest minds are still human—capable of fatigue, tunnel vision, cognitive bias, or simply not knowing what they don’t know.

Without AI in medicine, physicians face every clinical decision armed only with:

1️⃣ Their training (which may be years out of date),

2️⃣ Their memory (which is not as limitless as we pretend),

3️⃣ Their experience (which is always, by definition, incomplete), and

4️⃣ Their physical bandwidth (which, by the third patient of the day, is already strained).

It’s not that doctors can’t make good decisions alone. It’s that even great decisions can get better with backup.

Especially when the backup doesn’t get tired. Or hungry. Or interrupted mid-thought by a pager, an EHR glitch, or a child’s daycare call.

The Real Risk: Not Using AI Is No Longer Neutral

Avoiding AI isn’t a neutral choice anymore. It’s a decision with consequences.

If a tool exists that can help flag an otherwise missed diagnosis, surface alternative treatment paths, reduce documentation errors, or translate medical speak into patient understanding—and you choose not to use it? That’s no longer about preference. That’s about performance.

Which patient wouldn’t want their doctor to be better informed? Considering more nuances and details of their history? Paying focused attention to every last detail?

And performance, in medicine, isn’t graded on effort. It’s graded on outcomes.

We don't fault a pilot for using an autopilot system. We expect them to. We trust them to know when to override it, and when to lean in. Today's best doctors are facing a similar shift. And if AI can help smooth out some of the turbulence on a bumpy flight, who's going to complain?

In fact, avoiding AI may soon start to look like malpractice—not in a legal sense (yet), but in the quiet, professional sense of not bringing everything you could to the patient in front of you. I now routinely recommend that my patients ask AI questions about their symptoms or diagnoses, whether or not their doctor is comfortable with it. Here’s the article I wrote about that just recently.

Not Every Gap Is About Ignorance—But Some Are About Ego

This is hard to say aloud, but it's true: some resistance to AI comes from the sense that if a machine helps, it's cheating. Or it's impersonal. Or it's just "not how we do things." I can still hear the older docs from my training days puffing out their chests and moaning about technology and the loss of patient connection!

But refusing to use AI because it challenges your self-image is like refusing to use a calculator because you were good at long division in the 8th grade.

We are better when we’re supported by tools that let us think more, not less. The goal of medicine is not to prove how much you can hold in your head. The goal is to help people—accurately, kindly, and with minimal harm.

If AI in medicine helps us get closer to that ideal? It’s time to set aside the pride and pick up the tool.

Internal Links

🔗 Data-Driven Care at CED Clinic

🔗 Cai and Lila – CED Clinic’s AI agents

🔗 Upgrade Your Health: How to Use AI to Transform Your Medical Journey

🔗 Can AI Change Your Health Forever? Here’s How

AI Skepticism in Medicine: A Thoughtful FAQ for Thoughtful Clinicians

Let’s not sugarcoat it—AI makes a lot of doctors uneasy.

Some are worried about accuracy. Others about losing touch with patients. Still others about a profession that’s already stretched thin becoming more automated, impersonal, or, worst of all, irrelevant.

These are not irrational fears. They’re valid concerns rooted in real experience. So let’s talk about them—out loud, plainly, and without either panic or PR gloss.

Q: Isn’t AI just another burden—more screens, more alerts, more tech in the room?

A: It depends on the tool—and the way it’s used. Badly designed AI systems can absolutely create more noise. But the best AI in medicine works invisibly: auto-drafting notes, summarizing histories, flagging key risks, reducing the number of clicks, not increasing them. In truth, when done right, AI removes distractions, giving doctors more time to connect, not less. The point is not more tech—but smarter tech that gets out of the way.

Q: What if AI gets it wrong? Don’t I (the doc) still carry the liability?

A: Yes, and we should. AI isn't infallible. But neither is the human brain. The goal is not blind trust. It's informed collaboration. Think of AI not as a verdict machine, but as a second opinion: always available, rarely exhausted, and better at data retrieval than any of us made of good old-fashioned meat could ever be. I'm still the captain. AI is just my well-read navigator.

Q: Won’t AI de-skill doctors over time, like GPS did to map-reading?

A: Only if we let it. But in reality, AI can actually re-skill physicians by exposing them to patterns, conditions, and decision frameworks they might otherwise never encounter. Rather than turning off our brains, it pushes us to ask better questions and defend our choices. That’s not dumbing down medicine—it’s sharpening it.

Q: What about the human element? Isn’t empathy the real work of doctoring?

A: Absolutely. And that’s exactly why we need tools that offload the cognitive clutter. AI doesn’t replace the physician’s warmth, presence, or intuition. It creates space for those things. When doctors aren’t buried in documentation or second-guessing a rare side effect, they’re freer to be what patients actually want: fully present and emotionally available.

Q: Are we heading toward a world where AI replaces us?

A: Only if we reduce medicine to decision trees and checklists. But that’s not what good care is—and not what good AI aims to be. The best physicians won’t be replaced by machines. They’ll be replaced by physicians who know how to work with machines. Think augmentation, not automation.

Q: Is this really worth all the trouble?

A: Let me answer with a simple truth: when a tool can help you catch a diagnosis you might have missed, help your patient understand their condition better, and lighten the load on your most exhausting days—it’s not trouble. It’s part of what makes tomorrow’s medicine more human than today’s.

The Moral Case for AI in Medicine

There's a moment every doctor remembers: the case that still haunts them a little. The missed diagnosis. The vague "keep an eye on it" that turned out to be something serious. The patient who didn't speak up until it was too late, or the result that came in just after you signed off.

These aren’t failures of effort. They’re artifacts of a system built on human limits.

And those limits are the reason AI in medicine is not just a convenience—it’s becoming a moral imperative.

When we have tools that:

♦︎ Widen the differential diagnosis,

♦︎ Surface red flags early,

♦︎ Catch subtle signals,

♦︎ Communicate more clearly to patients, and

♦︎ Relieve the mental load that leads to error…

…then the decision not to use them becomes something more than personal preference.

It becomes a choice with consequences. And when the stakes are life and health, consequences matter.

This doesn’t mean we throw every machine into every decision. Or that we trust AI over our own judgment. But it does mean we start redefining what responsible, modern medicine looks like.

We’ve long accepted that a physician who ignores best practices is practicing below the standard of care. Well—what happens when AI is the best practice?

A Future Worth Practicing In

Let’s be clear: AI won’t heal anyone. It doesn’t soothe. It doesn’t sit by a bedside. It doesn’t hear the things a patient can’t quite say out loud.

But it does help you listen better. Prepare better. Interpret better. Teach better.

And that means something.

In an era where patients expect personalization, where data is overwhelming, and where burnout threatens the very fabric of the profession—AI is a partner in resilience. In clarity. In thinking better, not faster.

This is not about replacing the doctor.

It’s about replacing the fantasy that the doctor should have to do it all alone.

Coming Soon:

In my next post, I’m going to share a clinician-oriented guide: how to use AI in practice without losing your clinical identity, how to avoid common pitfalls, and how to preserve your humanity in a digitized world.

For curious non-clinicians: feel free to read along and see what I think my colleagues might be missing, and how I try to teach it!

Until then, let’s all stay curious. Let’s be open. And let’s not miss the opportunity to evolve—not away from medicine, but deeper into its potential.

FAQ – AI in Medicine: Good, Great, Bad, Catastrophic?

Q1: Isn’t AI just another tech trend that’ll fizzle out like Google Glass?

Maybe if it were just a gimmick. But AI in medicine isn’t about hype—it’s already under the hood of your hospital’s radiology suite, your EHR’s flag system, and half the patient portals your staff quietly curse. This isn’t vaporware. It’s embedded infrastructure. Think less Snapchat filter, more stethoscope evolution.

Q2: How do we know AI isn’t making things worse, just faster?

That’s a fair fear—and a real risk. If AI is poorly trained, biased, or left unsupervised, it absolutely can accelerate mistakes. But when used right, it’s not speeding up error—it’s scaffolding thinking. It doesn’t make you smarter; it lets you be smarter, more consistently, even on your third shift in a row.

Q3: Isn’t it dangerous to rely on AI for diagnosis?

It would be, if we were outsourcing judgment. But we're not. We're inviting a second brain to the table, one that never forgets rare syndromes or recent studies buried in a paywalled journal. You're still the doctor; AI is just whispering, "Hey, don't forget Still's disease," when your brain is leaning toward "probably stress."

Q4: Doesn’t AI kill the art of medicine?

Only if the “art” you’re referring to is illegible notes and guessing lab orders by feel. The real art—the careful listening, the gut check, the meaning-making—that’s yours and yours alone. AI doesn’t do nuance. It does volume. You paint; it hands you a cleaner brush.

Q5: Can’t doctors just think for themselves? Isn’t that what they’re trained for?

Sure. And chefs can chop vegetables by hand, but most still use sharp knives. AI isn’t a crutch—it’s a sharper knife. You still cook the meal. It just helps you not cut off a finger in the process.

Q6: What if AI disagrees with me? Should I be worried or offended?

Neither. You should be curious. If AI throws out an idea you didn’t consider, that’s an opportunity—not an insult. Think of it like a very nerdy, slightly robotic resident: sometimes brilliant, sometimes wrong, but always worth checking before you dismiss.

Q7: Will AI deskill the next generation of doctors?

Not if we train them right. In fact, it might do the opposite—push them to ask better questions, think more critically, and stop treating memorization like the holy grail. AI can be a shortcut to insight, not laziness, if we teach our students that their job is interpretation, not regurgitation.

Q8: Aren’t you just hyping this because it sounds futuristic and fancy?

Honestly? No. It’s not the future. It’s the present—and it’s not that fancy. Some of the best AI tools are quietly solving real problems behind the scenes, like reducing sepsis deaths, catching drug interactions, and cleaning up documentation. If anything, the hype is late.

Q9: What’s the risk of not using AI in medicine?

The same risk medicine ran when doctors resisted handwashing. You might do okay, until you don't. The real danger is complacency: believing that your memory, your training, and your intuition alone can manage today's complexity. You wouldn't run a marathon without shoes. Why practice modern medicine without support?

Q10: Be honest—does using AI make me a better doctor?

No. You make you a better doctor. But AI gives you bandwidth, backup, and a second set of eyes when yours are tired or tunneled. It’s not a magic wand—but it might be the assistant you didn’t know you needed. And it never asks for coffee.